Seungjae (Jay) Lee

I am a Ph.D. student in the Department of Computer Science 💻 at UMD, where I am fortunate to be co-advised by Prof. Furong Huang and Prof. Jia-Bin Huang.

Prior to joining UMD, I earned my Master's degree in the Department of Aerospace Engineering ✈️ at SNU, where I was fortunate to be advised by Prof. H. Jin Kim. I also had the privilege of working at the Generalizable Robotics and AI Lab (GRAIL) 🤖 at NYU under the guidance of Prof. Lerrel Pinto.

Before that, I received Bachelor's degrees in Mechanical and Aerospace Engineering from SNU ⚙️.

"💻 + ✈️ + 🤖 + ⚙️ = Me"

Education & Affiliations

Ph.D. in Computer Science

Advised by Professor Furong Huang and Professor Jia-Bin Huang.

Aug 2024 - Present | College Park, MD

Visiting Researcher

Advised by Professor Lerrel Pinto.

Jul 2023 - Jun 2024 | New York, NY

M.S. in Aerospace Engineering

Advised by Professor H. Jin Kim.

Mar 2021 - Feb 2024 | Seoul, Korea

B.S. in Mechanical & Aerospace Engineering

Mar 2015 - Feb 2021 | Seoul, Korea

Research

My research focuses on understanding how agents interact with their environments and on developing data-efficient decision-making (robot learning) algorithms, particularly in reinforcement learning (RL). Selected publications are marked with ★ below.

Seungjae Lee*, Yoonkyo Jung*, Inkook Chun*, Yao-Chih Lee, Zikui Cai, Hongjia Huang, Aayush Talreja, Tan Dat Dao, Yongyuan Liang, Jia-Bin Huang, Furong Huang (*equal contribution)

Under review

project website / arXiv

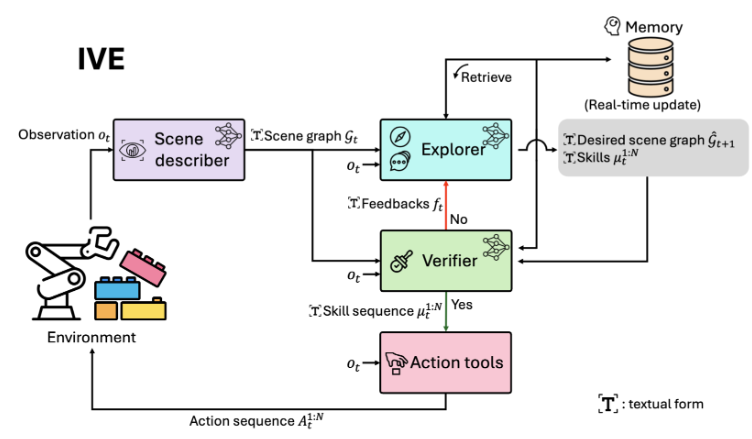

Zikui Cai, Shivin Dass, Seungjae Lee, Mingyo Seo, Kaushal Janga, Aadi Palnitkar, Tan Dat Dao, Ruchit Rawal, Mintong Kang, Ruijie Zheng, Kaiyu Yue, Bo Li, Yuke Zhu, Roberto Martín-Martín, Tom Goldstein, Furong Huang

Under review

Yuanchen Ju, Yongyuan Liang, Yen-Jen Wang, Nandiraju Gireesh, Yuanliang Ju, Seungjae Lee, Qiao Gu, Elvis Hsieh, Furong Huang, Koushil Sreenath

Under review

project website / arXiv

Inkook Chun, Seungjae Lee, Michael Samuel Albergo, Saining Xie, Eric Vanden-Eijnden

NeurIPS, 2025

arXiv

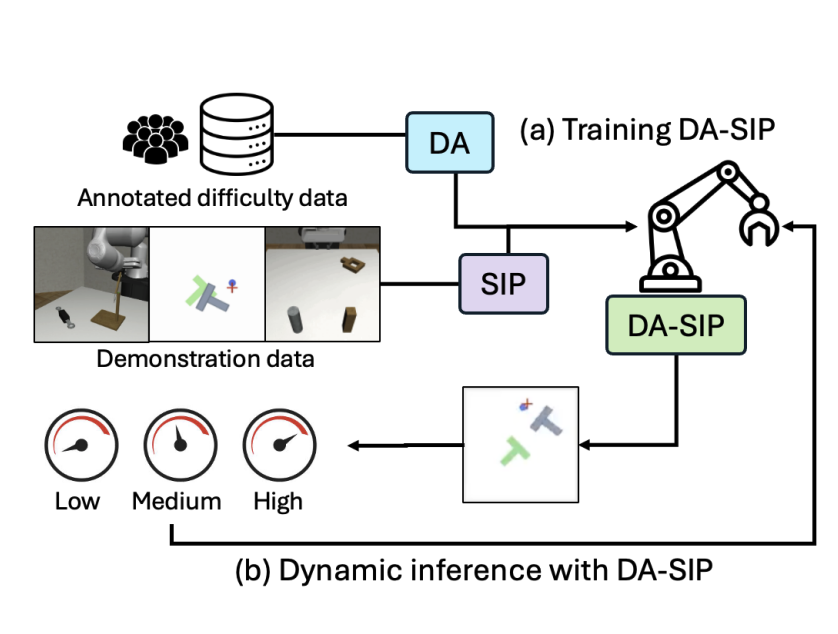

Seungjae Lee*, Daniel Ekpo*, Haowen Liu, Furong Huang†, Abhinav Shrivastava†, Jia-Bin Huang† (*equal contribution, †equal advising)

CoRL, 2025

project website / arXiv

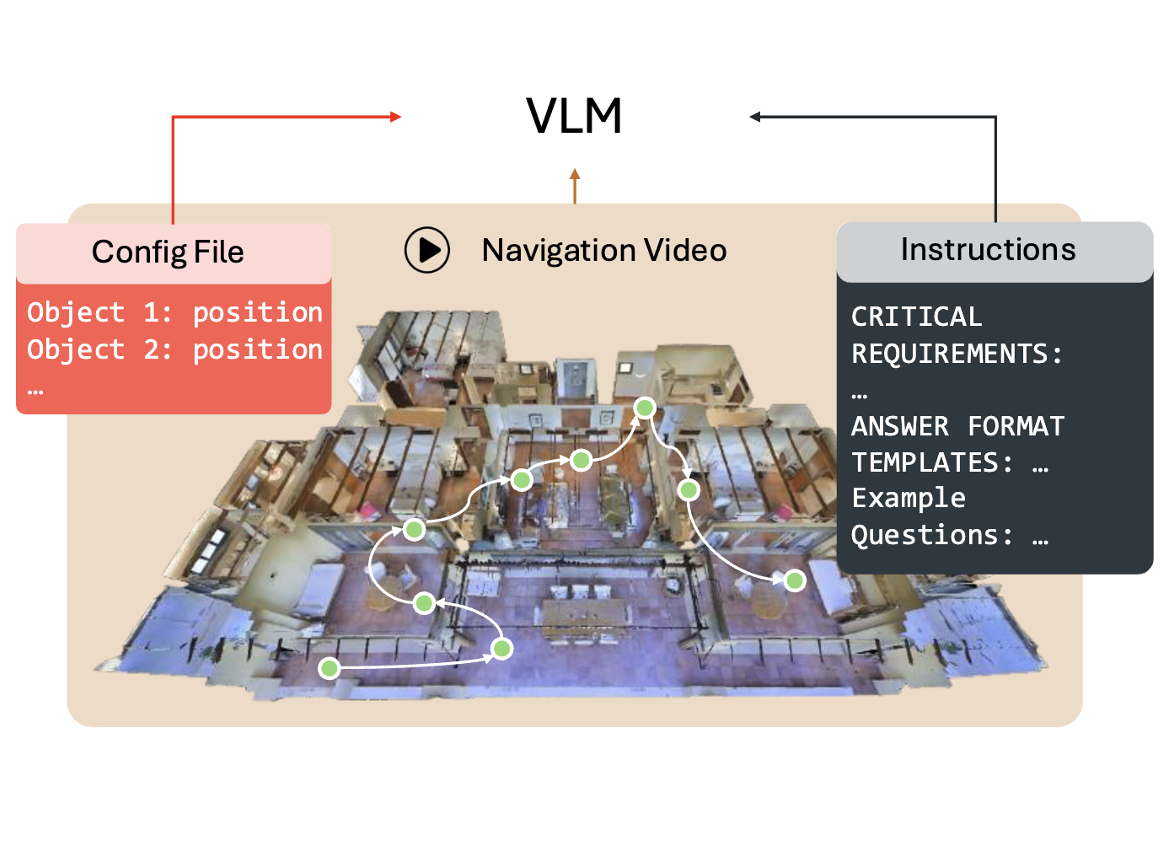

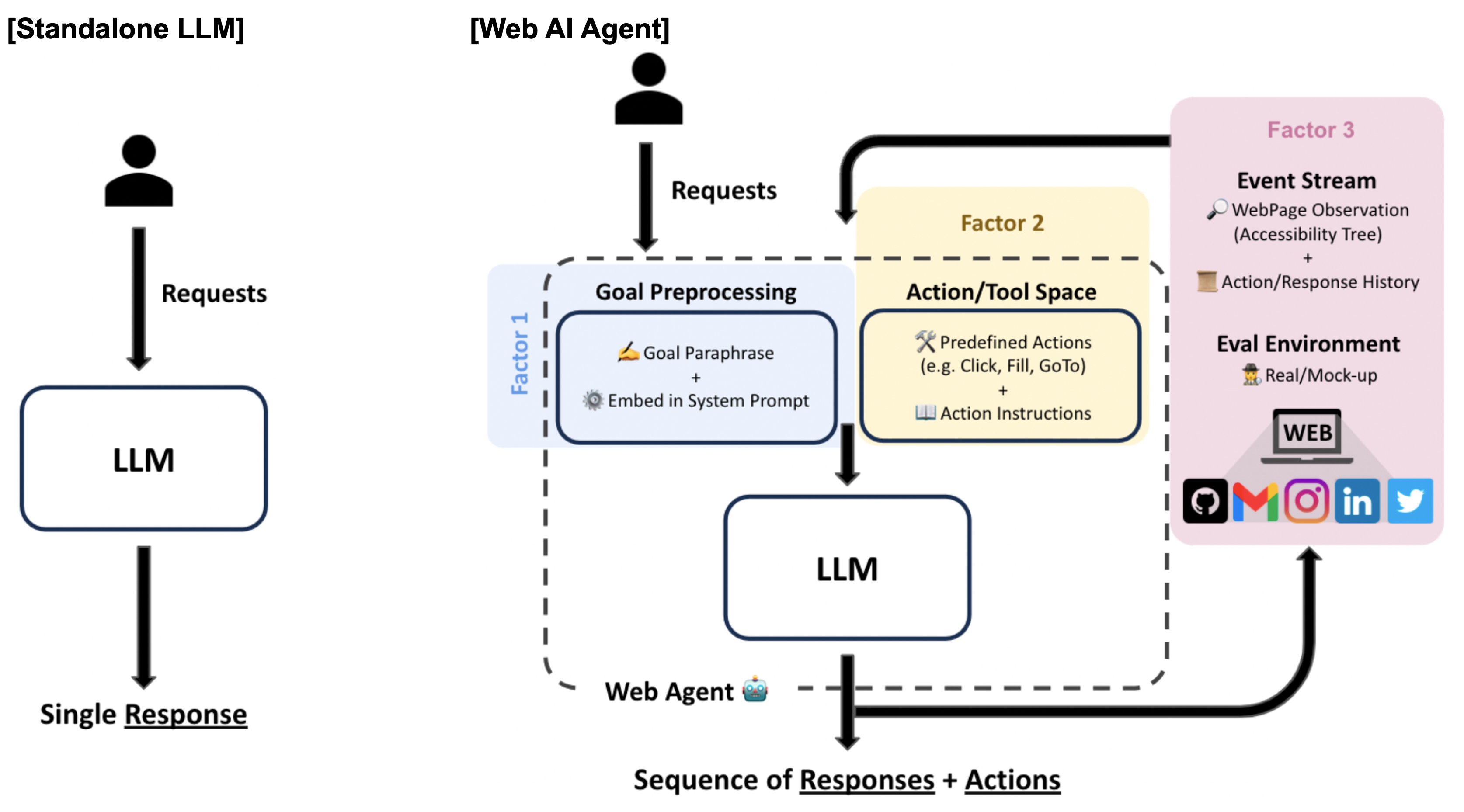

Jeffrey Yang Fan Chiang*, Seungjae Lee*, Jia-Bin Huang, Furong Huang, Yizheng Chen (*equal contribution)

ICLRw, 2025

project website / arXiv

Haritheja Etukuru, Norihito Naka, Zijin Hu, Seungjae Lee, Julian Mehu, Aaron Edsinger, Chris Paxton, Soumith Chintala, Lerrel Pinto, Nur Muhammad Mahi Shafiullah

ICRA, 2025

project website / paper / github

CoRL 2024 Workshop on Language and Robot Learning (🏆 Oral)

Seungjae Lee, Yibin Wang, Haritheja Etukuru, H. Jin Kim, Nur Muhammad Mahi Shafiullah, Lerrel Pinto

ICML, 2024 (🏆 Spotlight, Top 3.5%)

project website / arXiv / github / 🤗 LeRobot library

RSS 2024 Workshop SemRob (🏆 Oral Spotlight)

ICML 2024 Workshop MFM-EAI (🏆 Outstanding Paper Award - Winner)

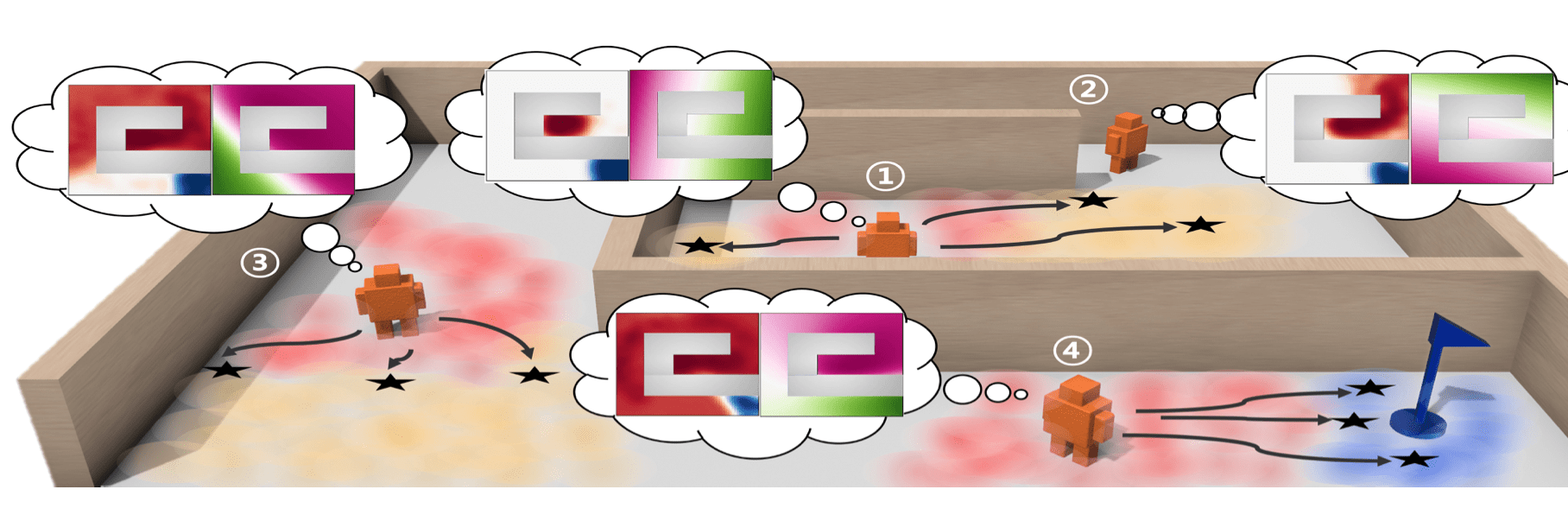

Seungjae Lee, Daesol Cho, Jonghae Park, H. Jin Kim

NeurIPS, 2023

arXiv

Daesol Cho, Seungjae Lee, H. Jin Kim

NeurIPS, 2023

arXiv

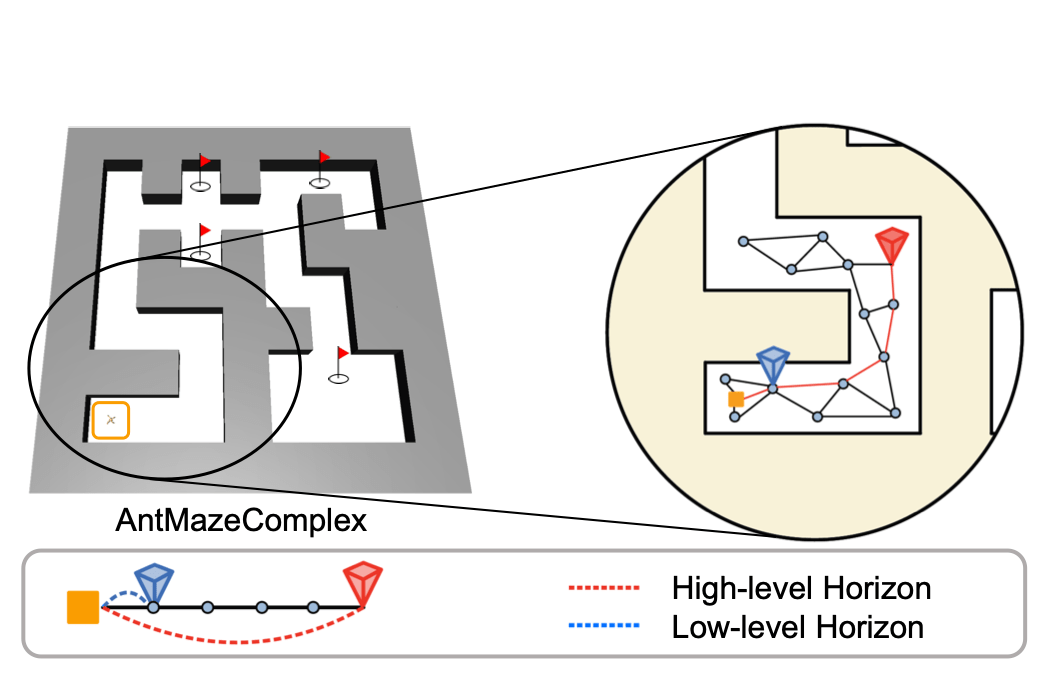

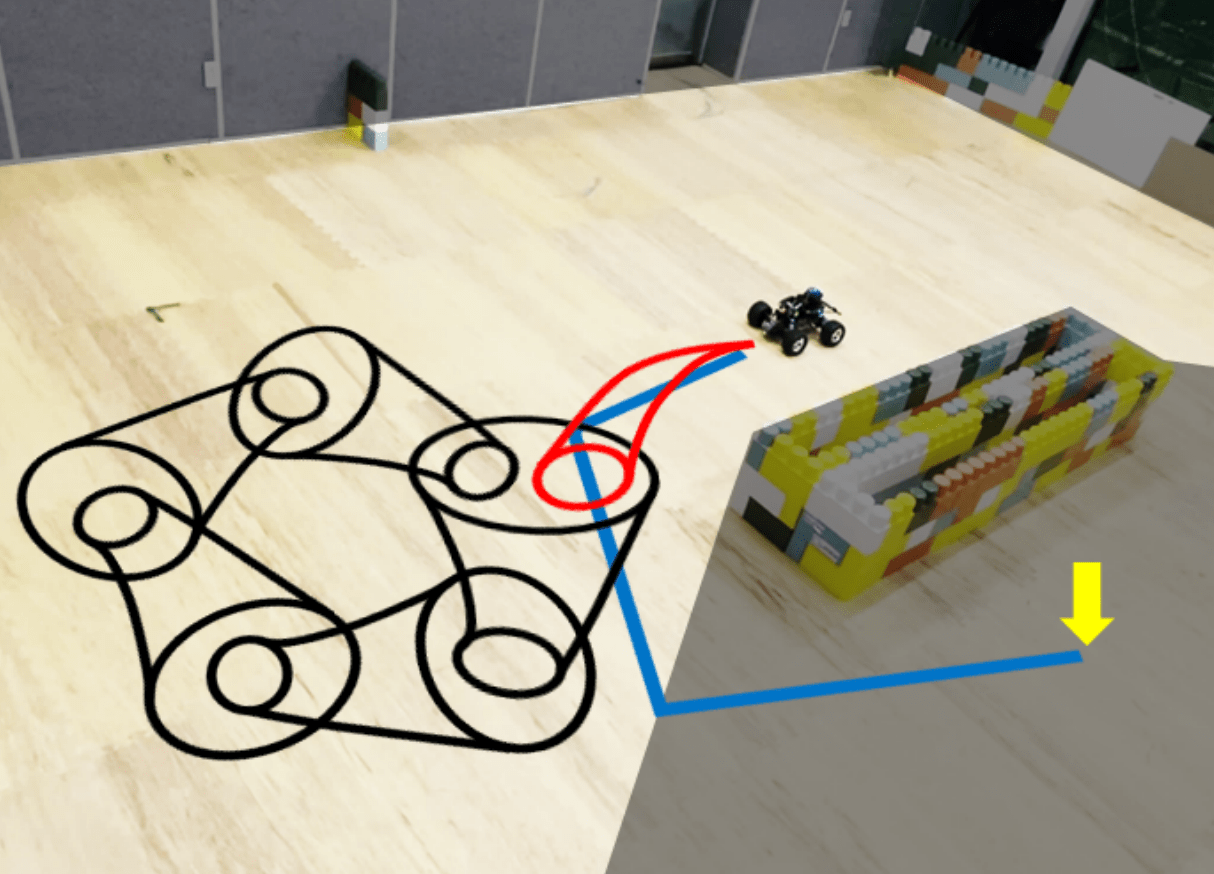

Dongseok Shim*, Seungjae Lee*, H. Jin Kim (*equal contribution)

ICML, 2023

arXiv / github

Daesol Cho*, Seungjae Lee*, H. Jin Kim (*equal contribution)

ICLR, 2023 (🏆 Spotlight, Top 5.65%)

arXiv / github

Seungjae Lee, Jongho Shin, Hyeong-Geun Kim, Daesol Cho, H. Jin Kim

IJCAS, 2023

Seungjae Lee, Jigang Kim, Inkyu Jang, H. Jin Kim

NeurIPS, 2022 (🏆 Oral, Top 1.76%)

arXiv / github

Inkyu Jang, Dongjae Lee, Seungjae Lee, H. Jin Kim

IROS, 2021

arXiv

Experiences

Toyota Research Institute

Large Behavior Model Team Intern

May 2025 - Aug 2025 | Boston, MA

Samsung Electronics

Deep Learning Algorithm Team Intern

Jul 2020 - Sep 2020 | Gyeonggi-do, Korea

Deepest

Sep 2020 - Feb 2022 | Seoul, Korea

Awards and Achievements

- [Scholarship] Dashin Songchon Foundation (Aug 2024 - Present)

- [Awards] Graduated Summa Cum Laude, Seoul National University (1st prize in the Department of Aerospace Engineering)

- [Scholarship] Hyundai Motor Chung Mong-Koo Foundation (Aug 2021 - Jul 2023)

- [Awards] NeurIPS Scholar Award

- [Awards] Global Excellence Scholarship 2022, Hyundai Motor Chung Mong-Koo Foundation

- [Awards] Best poster competition, SNU Artificial Intelligence Institute Spring Retreat

- [Awards] Global Excellence Scholarship 2023, Hyundai Motor Chung Mong-Koo Foundation

Academic Services

- Program Committee, RSS 2024 SemRob Workshop

- Conference reviewer for ICML'22 '24 '25

- Conference reviewer for IROS'23

- Conference reviewer for NeurIPS'23 '25

- Conference reviewer for ICLR'24 '25 '26

- Conference reviewer for ICRA'24 '25

- Conference reviewer for AAAI'25

- Conference reviewer for CoRL'25

- Conference reviewer for RSS'25

- Conference reviewer for CVPR'26