VQ-BeT: Behavior Generation with Latent Actions

ICML 2024 Spotlight

Autonomous skills

[Pick up Bread → Place in the Bag → Pick up Bag → Place on the Table] (X8)

[Open Drawer → Pick and Place Box → Close Drawer] (X8)

[Open Microwave → Pick up Bread → Place on the Table] (X8)

[Can to Fridge → Fridge Closing → Toaster Opening] (X5)

[Can to Toaster → Toaster Closing → Fridge Closing] (X5)

Abstract

Generative modeling of complex behaviors from labeled datasets has been a longstanding problem in decision-making. Unlike language or image generation, decision-making requires modeling actions: continuous-valued vectors that are multimodal in their distribution, potentially drawn from uncurated sources, where generation errors can compound in sequential prediction. A recent class of models called Behavior Transformers (BeT) addresses this by discretizing actions using k-means clustering to capture different modes. However, k-means struggles to scale to high-dimensional action spaces or long sequences and lacks gradient information, and thus BeT suffers in modeling long-range actions. In this work, we present Vector-Quantized Behavior Transformer (VQ-BeT), a versatile model for behavior generation that handles multimodal action prediction, conditional generation, and partial observations. VQ-BeT augments BeT by tokenizing continuous actions with a hierarchical vector quantization module. Across seven environments including simulated manipulation, autonomous driving, and robotics, VQ-BeT improves on state-of-the-art models such as BeT and Diffusion Policies. Importantly, we demonstrate VQ-BeT’s improved ability to capture behavior modes while accelerating inference speed 5× over Diffusion Policies.
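BeT assigns each action to its nearest k-means centroid. VQ-BeT instead uses a hierarchical (residual) vector quantizer, in which each layer quantizes the residual left over by the layers before it. The plain-Python sketch below illustrates residual quantization under simplifying assumptions: the two codebooks are tiny hand-picked toy values rather than learned ones, and the quantizer acts directly on a 2-D action instead of on the output of a learned encoder. All function and variable names are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]
Codebook = Sequence[Sequence[float]]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook: Codebook, v: Sequence[float]) -> int:
    """Index of the codebook entry closest to v (one k-means-style lookup)."""
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i], v))


def rvq_encode(codebooks: Sequence[Codebook], v: Sequence[float]) -> List[int]:
    """Residual VQ: each layer quantizes what the earlier layers left over."""
    residual = list(v)
    codes = []
    for cb in codebooks:
        idx = nearest(cb, residual)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, cb[idx])]
    return codes


def rvq_decode(codebooks: Sequence[Codebook], codes: Sequence[int]) -> Vector:
    """Reconstruction is the sum of the chosen entry from every layer."""
    out = [0.0] * len(codebooks[0][0])
    for cb, idx in zip(codebooks, codes):
        out = [o + c for o, c in zip(out, cb[idx])]
    return out


# Hypothetical toy codebooks (hand-picked, not learned).
CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]],   # primary (coarse) codes
    [[0.0, 0.0], [0.25, 0.0], [0.0, -0.25]],  # secondary (fine) codes
]

if __name__ == "__main__":
    action = [1.3, 0.9]
    codes = rvq_encode(CODEBOOKS, action)
    print(codes, rvq_decode(CODEBOOKS, codes))  # [1, 1] [1.25, 1.0]
```

With only the primary layer, `[1.3, 0.9]` would be reconstructed as `[1.0, 1.0]` (squared error 0.10); the secondary layer brings it to `[1.25, 1.0]` (squared error 0.0125). With K entries per layer and L layers, a residual quantizer can express K^L distinct codes while storing only K·L vectors, one plausible reason it scales more gracefully than a single flat codebook.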

Method

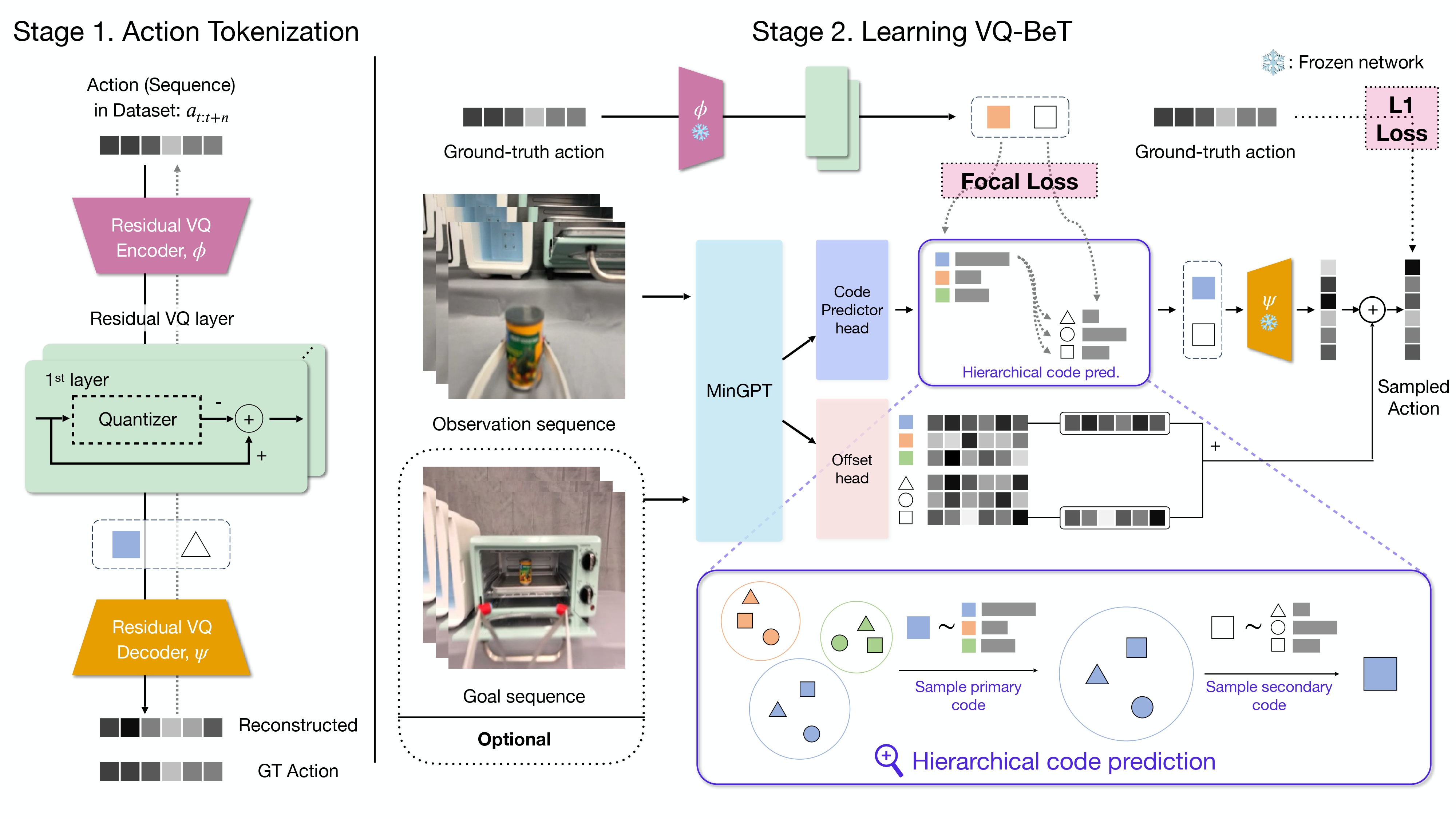

Overview of VQ-BeT, broken down into the residual VQ encoder-decoder training phase and the VQ-BeT training phase. The same architecture works for both conditional and unconditional cases with an optional goal input. In the bottom right, we show a detailed view of the hierarchical code prediction method.
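The hierarchical code prediction shown in the overview picks one code per residual VQ layer; the chosen codebook vectors are summed and passed through the VQ decoder to produce actions. The plain-Python sketch below illustrates only this decoding side, under stated assumptions: the per-layer scores are given directly (standing in for hypothetical prediction heads), the learned decoder is replaced by a toy linear function, and a continuous offset is added at the end in the spirit of BeT's offset prediction. How VQ-BeT conditions one layer's code on another is not modeled here, and all names and values are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def argmax(xs: Sequence[float]) -> int:
    """Index of the largest score."""
    return max(range(len(xs)), key=lambda i: xs[i])


def decode_action(
    layer_logits: Sequence[Sequence[float]],
    codebooks: Sequence[Sequence[Sequence[float]]],
    decoder: Callable[[Vector], Vector],
    offset: Sequence[float],
) -> Vector:
    """Map per-layer code scores to a continuous action (toy sketch)."""
    latent = [0.0] * len(codebooks[0][0])
    for logits, cb in zip(layer_logits, codebooks):
        idx = argmax(logits)  # greedy pick; sampling from softmax(logits) is an alternative
        latent = [z + c for z, c in zip(latent, cb[idx])]
    coarse = decoder(latent)  # stand-in for the learned VQ decoder
    return [a + o for a, o in zip(coarse, offset)]  # continuous offset refines the result


def scale_by_two(z: Vector) -> Vector:
    """Hypothetical linear decoder used only for illustration."""
    return [2.0 * x for x in z]


# Hypothetical toy codebooks, one per residual layer.
CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]],   # primary codes
    [[0.0, 0.0], [0.25, 0.0], [0.0, -0.25]],  # secondary codes
]

if __name__ == "__main__":
    logits = [[0.1, 2.0, -1.0], [0.0, 0.3, 0.5]]  # selects primary 1, secondary 2
    print(decode_action(logits, CODEBOOKS, scale_by_two, offset=[0.05, -0.05]))  # ≈ [2.05, 1.45]
```

Keeping the offset separate from the codes lets the discrete part choose a behavior mode while the continuous part corrects the quantization error within that mode.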

Experiment Results

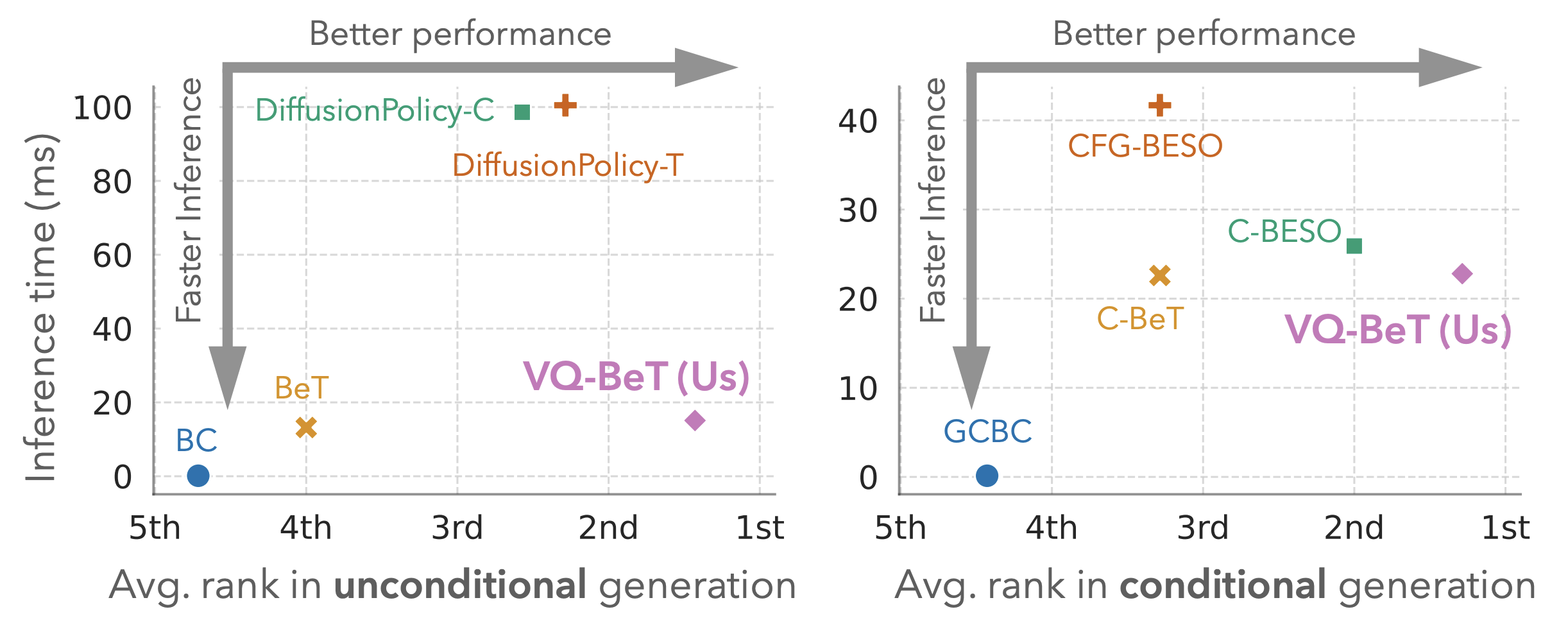

We show two plots comparing VQ-BeT and relevant baselines on unconditional and goal-conditional behavior generation. In each plot, the x-axis shows relative success, measured as the average rank across a suite of seven simulated tasks, and the y-axis shows inference time.
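Relative success is summarized as an average rank across tasks. One common way to compute such a score is sketched below in plain Python. The tie-handling rule (tied methods share the mean of their positions) is an assumption, every method is assumed to be evaluated on every task, and the method names and success rates are made-up toy inputs, not results from the paper.

```python
from typing import Dict, List


def ranks_for_task(scores: Dict[str, float]) -> Dict[str, float]:
    """Rank methods on one task: 1 = highest success; ties share the mean position."""
    ordered = sorted(scores, key=lambda m: -scores[m])
    ranks: Dict[str, float] = {}
    i = 0
    while i < len(ordered):
        j = i
        while j + 1 < len(ordered) and scores[ordered[j + 1]] == scores[ordered[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[ordered[k]] = shared
        i = j + 1
    return ranks


def average_rank(per_task: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each method's rank over all tasks (lower is better)."""
    totals: Dict[str, float] = {}
    for scores in per_task:
        for method, r in ranks_for_task(scores).items():
            totals[method] = totals.get(method, 0.0) + r
    return {m: t / len(per_task) for m, t in totals.items()}


# Hypothetical toy success rates for three made-up methods on three tasks.
TASKS = [
    {"A": 0.9, "B": 0.7, "C": 0.5},
    {"A": 0.6, "B": 0.8, "C": 0.4},
    {"A": 0.7, "B": 0.5, "C": 0.9},
]

if __name__ == "__main__":
    print(average_rank(TASKS))  # A ≈ 1.67, B = 2.0, C ≈ 2.33
```

Ranking per task before averaging keeps tasks with very different success scales from dominating the summary, at the cost of discarding how large each gap is.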

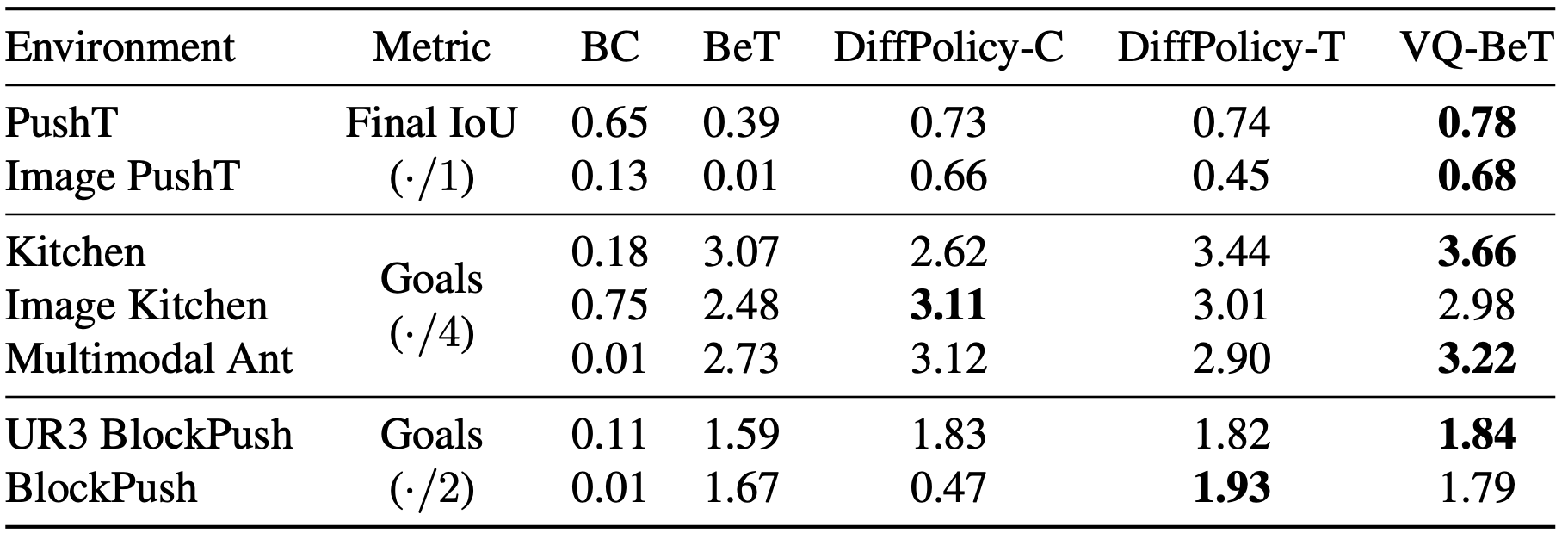

Evaluation of VQ-BeT and related baselines on unconditional tasks in simulated environments.

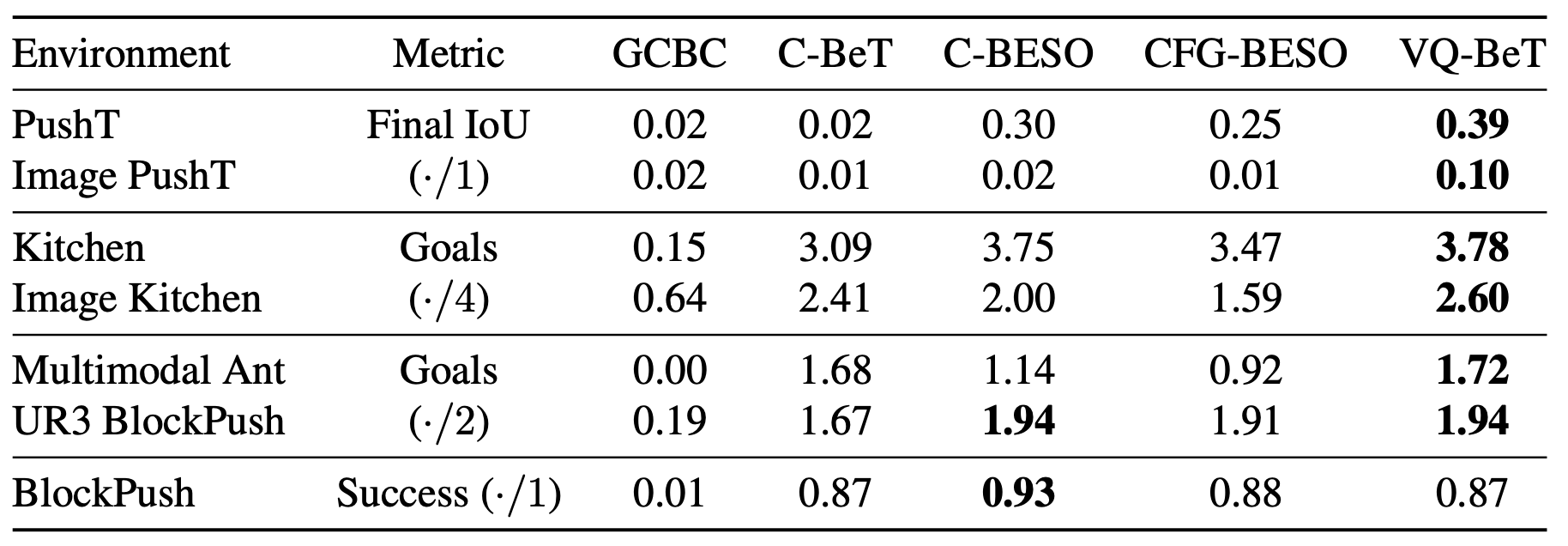

Evaluation of VQ-BeT and related baselines on conditional tasks in simulated environments.